The Agentic Operating Model: How AI Agents Actually Work Inside Real Organizations

10 min read

Jan 02, 2026

Agentic AI marks one of the most radical shifts in organizational structure since the industrial and digital revolutions. These AI systems can analyze data, make decisions, and execute actions within enterprise systems with little or no human intervention.

Gartner projects that 33% of enterprise software applications will include agentic AI by 2028, up from less than 1% in 2024. Organizations are adopting the technology this quickly because of measurable gains in speed, operational throughput, and decision quality. A European utility provider illustrates these benefits: its multimodal AI assistant, which serves three million customers, substantially cut handling times and improved customer satisfaction.

The projected business impact is striking. Gartner also predicts that by 2029 agentic AI will autonomously resolve 80% of common customer service issues, leading to a 30% drop in operational costs. The path ahead remains challenging, however: Gartner predicts that more than 40% of agentic AI projects will fail by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

This piece explores the unique aspects of agentic AI compared to generative AI and traditional automation. Readers will learn how it reshapes enterprise operating models and what leaders must understand about governance, risk management, and the future of work in an agentic organization.

What is Agentic AI and How Does It Differ from Traditional Automation?

Agentic AI vs Generative AI: Key Differences

Large language models (LLMs) power both generative AI and agentic AI, but the two serve different purposes. The main differences lie in how they operate:

| Characteristic | Agentic AI | Generative AI |
|---|---|---|
| Core Function | Executes multistep tasks autonomously | Creates content based on prompts |
| Autonomy | High - operates independently toward objectives | Low - depends on human prompts |
| Behavior | Proactive - initiates actions based on goals | Reactive - responds only to prompts |
| Primary Value | Workflow automation and complex problem-solving | Content creation |

Source: Thomson Reuters, https://www.thomsonreuters.com/en/insights/articles/agentic-ai-vs-generative-ai-the-core-differences

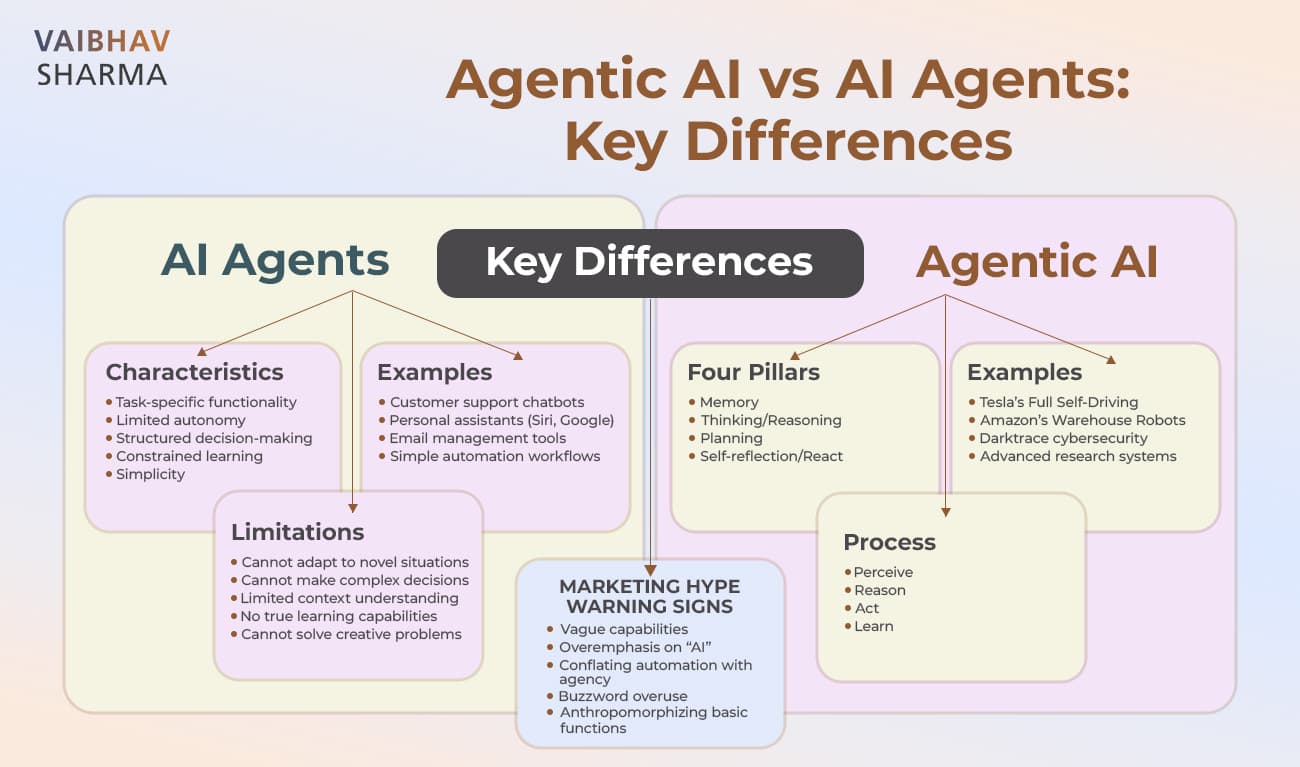

Agentic AI Definition and Core Capabilities

Agentic AI systems pursue specific goals with minimal human oversight: they perceive their environment, interpret context, make decisions, and act. Four core capabilities make this possible:

- Memory - Both short-term session state and long-term vectorized stores

- Planning - Breaking down goals into ordered, replannable subtasks

- Tool Use - Safe interaction with enterprise systems through formal adapters

- Reasoning - Combining LLM-driven chain-of-thought with external verification
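To make the memory capability concrete, here is a minimal sketch, assuming a toy bag-of-words vector in place of a real embedding model and an in-process list in place of a vector database. The `AgentMemory` class and its methods are hypothetical: short-term memory is a bounded buffer of recent session turns, and long-term memory is recalled by cosine similarity.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words term-count vector.
    A real agent would call an embedding model instead."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class AgentMemory:
    """Hypothetical two-tier memory: short-term session state plus a
    long-term store searched by vector similarity."""

    def __init__(self, session_size: int = 5):
        # Short-term: a bounded buffer that keeps only the latest turns.
        self.session: deque = deque(maxlen=session_size)
        # Long-term: (text, vector) pairs that persist across sessions.
        self.long_term: list = []

    def remember_turn(self, text: str) -> None:
        self.session.append(text)

    def store_fact(self, text: str) -> None:
        self.long_term.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list:
        """Return the k long-term entries most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = AgentMemory(session_size=2)
memory.store_fact("customer prefers email contact")
memory.store_fact("invoice 1042 is overdue")
for turn in ["hello", "check my invoice", "thanks"]:
    memory.remember_turn(turn)

print(list(memory.session))                        # ['check my invoice', 'thanks']
print(memory.recall("which invoice is overdue"))   # ['invoice 1042 is overdue']
```

The session buffer silently drops the oldest turn once it is full, while long-term facts survive and are retrieved by relevance rather than recency.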

Agentic systems work through an observe-plan-act-refine cycle. This helps them gather information, accomplish tasks, and get better over time.
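The observe-plan-act-refine cycle can be sketched as a simple control loop. This is a minimal illustration under stated assumptions, not a production framework: `toy_planner` is a hard-coded stand-in for an LLM planning call, the entries in the hypothetical `TOOLS` dictionary play the role of the formal adapters mentioned above, and `verify` is a deliberately trivial external check.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str]  # (tool name, argument)

# Hypothetical tool adapters: the only actions this agent is allowed to take.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"status=shipped id={order_id}",
    "draft_reply": lambda status: f"Your order is {status}.",
}


def toy_planner(goal: str, observations: List[str]) -> Optional[Step]:
    """Stand-in for an LLM planner: returns the next step, or None when done."""
    if not observations:
        return ("lookup_order", goal.split()[-1])
    if len(observations) == 1:
        status = observations[0].split()[0].split("=")[1]   # "status=shipped" -> "shipped"
        return ("draft_reply", status)
    return None


def verify(result: str) -> bool:
    """External check on a tool result; here it only rejects empty output."""
    return bool(result.strip())


def run_agent(goal: str, max_steps: int = 5) -> List[str]:
    observations: List[str] = []
    for _ in range(max_steps):
        step = toy_planner(goal, observations)       # plan (or replan) from what was observed
        if step is None:
            return observations                      # goal reached
        tool, arg = step
        if tool not in TOOLS:                        # act only through allowlisted adapters
            raise PermissionError(f"tool {tool!r} is not allowed")
        result = TOOLS[tool](arg)                    # act
        if verify(result):                           # refine: only verified results count
            observations.append(result)              # observe: feeds the next planning step
    raise RuntimeError("step budget exhausted before the goal was reached")


print(run_agent("check order 1042"))
# ['status=shipped id=1042', 'Your order is shipped.']
```

A result that fails verification is never added to `observations`, so the planner sees the same state and retries or revises that step on the next pass; `max_steps` bounds the loop so a stuck agent fails loudly instead of running forever.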

How Agentic Workflows Enable Autonomy and Adaptation

Traditional automation runs on static decision trees: when X happens, do Y. Agentic workflows replace rigid rules with adaptive policies. They ingest data from many sources, analyze problems, develop plans, and complete tasks. This enables:

- Real-time responses to changing environments and data

- Complex problem-solving through iterative attempts

- Self-improvement through feedback and continuous learning
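The difference between a static rule and an adaptive policy that improves from feedback can be illustrated with a small sketch. As an assumption for illustration, the adaptive policy here is an epsilon-greedy bandit, one simple way to let outcomes shift future choices; the article does not prescribe this technique. The channel names and the `toy_outcome` environment are hypothetical.

```python
import random

STRATEGIES = ["email", "chat", "phone"]  # hypothetical resolution channels


def static_rule(ticket_type: str) -> str:
    """Traditional automation: a fixed 'when X happens, do Y' mapping."""
    return "email" if ticket_type == "billing" else "chat"


class AdaptivePolicy:
    """Epsilon-greedy policy: usually picks the strategy with the best observed
    success rate, sometimes explores, and updates itself from feedback."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.attempts = {s: 0 for s in strategies}
        self.successes = {s: 0 for s in strategies}

    def rate(self, strategy):
        tried = self.attempts[strategy]
        return self.successes[strategy] / tried if tried else 0.0

    def choose(self):
        untried = [s for s, n in self.attempts.items() if n == 0]
        if untried:
            return untried[0]                                 # try everything once
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.attempts))       # explore
        return max(self.attempts, key=self.rate)              # exploit

    def feedback(self, strategy, success):
        self.attempts[strategy] += 1
        self.successes[strategy] += int(success)


def toy_outcome(strategy):
    """Hypothetical environment: only phone follow-ups resolve these tickets."""
    return strategy == "phone"


policy = AdaptivePolicy(STRATEGIES, epsilon=0.1, seed=42)
for _ in range(100):
    choice = policy.choose()
    policy.feedback(choice, toy_outcome(choice))

print(static_rule("outage"))              # chat, even though it never succeeds here
print(max(STRATEGIES, key=policy.rate))   # phone
```

The static rule keeps routing to the same channel no matter how often it fails, while the adaptive policy shifts toward whatever works and keeps a small exploration budget in case conditions change.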

Together, these capabilities let organizations hand complex, multistep tasks to AI agents and complete them quickly, improving efficiency, growth potential, and decision quality.

How Agentic AI Reshapes the Enterprise Operating Model

AI-First Workflows and Human-in-the-Loop Oversight

AI-first workflows turn traditional automation on its head. Traditional automation merely speeds up existing processes; AI-first workflows reimagine those processes with AI agents as the primary executors and humans providing strategic oversight. When processes are rebuilt from the ground up this way, improvements can reach 90% or more. For example, EY Consulting cut one process from 44 hours to 45 minutes, a reduction of roughly 98%, by rebuilding it around AI capabilities. Human-in-the-loop (HITL) oversight keeps these workflows safe in three ways:

- Accuracy and Reliability - Humans check outputs and prevent errors from causing harm

- Ethical Decision-Making - Humans set up alerts and safeguards for high-stakes decisions

- Transparency - HITL creates audit trails that support legal defense and compliance reviews

Effective HITL works like cruise control in a car: AI handles routine operations while humans stay ready to step in when needed.
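One way to implement this cruise-control pattern is a risk-tiered approval gate. The sketch below is a minimal illustration, assuming each proposed action carries a numeric risk score (hypothetical; a real system might derive it from action type, monetary amount, or reversibility) and that the human reviewer is represented by a callback. The `Action` and `HITLGate` names and the 0.7 threshold are assumptions. Every decision is appended to an audit log, which supports the transparency point above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class Action:
    name: str
    risk: float  # hypothetical score in [0, 1]; higher means higher impact


@dataclass
class HITLGate:
    approve: Callable[[Action], bool]   # stands in for a human reviewer
    threshold: float = 0.7              # assumption: at or above this, a human decides
    audit_log: List[dict] = field(default_factory=list)

    def execute(self, action: Action, run: Callable[[Action], str]) -> str:
        if action.risk >= self.threshold:
            approved, decided_by = self.approve(action), "human"
        else:
            approved, decided_by = True, "agent"
        # Every decision is logged, whether or not the action runs.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action.name,
            "risk": action.risk,
            "decided_by": decided_by,
            "approved": approved,
        })
        return run(action) if approved else "blocked"


gate = HITLGate(approve=lambda a: a.name != "delete_customer_records")
run_action = lambda a: f"done: {a.name}"

print(gate.execute(Action("send_status_email", 0.1), run_action))        # done: send_status_email
print(gate.execute(Action("delete_customer_records", 0.95), run_action)) # blocked
print([e["decided_by"] for e in gate.audit_log])                         # ['agent', 'human']
```

Routine, low-risk actions flow through without interruption, so humans only spend attention where the stakes justify it.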

Flat Agentic Teams vs Traditional Hierarchies

Flat networks of outcome-focused agentic teams are taking the place of traditional organizations built around functional silos. The structural changes are significant:

| Traditional Hierarchy | Flat Agentic Organization |
|---|---|
| Functional silos | Outcome-focused teams |
| Size limited by human coordination | 2-5 humans can supervise 50-100 specialized agents |
| Sequential handoffs | Parallel agent processing |
| Role-based organization | Objective-based organization |
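The shift from sequential handoffs to parallel agent processing can be sketched with Python's standard `concurrent.futures` module. Assumption: each specialized agent is a hypothetical function whose work is simulated with `time.sleep`, standing in for an I/O-bound call such as an API request or model inference.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_agent(specialty: str, seconds: float):
    """Hypothetical specialized agent; time.sleep simulates I/O-bound work
    such as an API call or model inference."""
    def agent(case_id: str) -> str:
        time.sleep(seconds)
        return f"{specialty} review of {case_id} complete"
    return agent


AGENTS = [make_agent(s, 0.2) for s in ("credit", "fraud", "compliance", "pricing")]


def sequential(case_id: str) -> list:
    # Traditional handoff: each specialist waits for the previous one.
    return [agent(case_id) for agent in AGENTS]


def parallel(case_id: str) -> list:
    # Agentic fan-out: all specialists run at once; map() keeps input order.
    with ThreadPoolExecutor(max_workers=len(AGENTS)) as pool:
        return list(pool.map(lambda agent: agent(case_id), AGENTS))


timings = {}
for fn in (sequential, parallel):
    start = time.perf_counter()
    results = fn("case-17")
    timings[fn.__name__] = time.perf_counter() - start
    print(f"{fn.__name__}: {len(results)} results in {timings[fn.__name__]:.2f}s")
```

On a typical machine the sequential run takes about 0.8 s and the parallel run about 0.2 s, because total latency drops from the sum of the agents' times to roughly the slowest single agent. A human supervisor then reviews one merged set of results instead of shepherding each handoff.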

Agentic Commerce and Cross-Functional Collaboration

Agentic commerce introduces a key shift: AI agents detect purchase intent early and proactively build detailed shopping plans. Brands must therefore establish their presence at the point of need rather than competing at the comparison stage. In practice, agents operate across three areas:

- Customer-facing shopping assistants

- AI-driven workforce agents that boost employee productivity

- Operational agents that make e-commerce functions efficient

The end result is an AI-first organizational culture. Humans set strategic vision while AI agents handle tasks and break down traditional department barriers.

Governance and Risk Management in Agentic Organizations

Real-Time Guardrails with Embedded Control Agents

Runtime guardrails act like circuit breakers for AI systems. They kick in right when actions happen instead of waiting for problems to surface. These safety measures cover everything from datasets to models, applications, and workflows. The goal is to make sure AI outputs stay reliable.

Key types of runtime guardrails include:

| Guardrail Type | Function | Risk Mitigated |
|---|---|---|
| Identity & Access | Control who agents can act as | Unauthorized access, impersonation |
| Data Sensitivity | Prevent exposure of sensitive information | Compliance violations, data leaks |
| Action Authorization | Monitor high-impact operations | Irreversible system changes |
| Behavioral Safety | Filter harmful content | Toxic outputs, hallucinations |
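The four guardrail types in the table can be chained as runtime checks that run before an agent's action executes. This is a minimal sketch under loud assumptions: the identity table, the e-mail regex standing in for sensitive-data detection, the set of irreversible actions, and the blocked-term list (a crude placeholder for a real content-safety classifier) are all hypothetical. Checks run in order, and the first failure stops the action, circuit-breaker style.

```python
import re
from dataclasses import dataclass

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
ALLOWED_IDENTITIES = {"billing-agent": {"svc-billing"}}  # agent -> identities it may act as
IRREVERSIBLE = {"delete_account", "issue_refund"}        # require explicit authorization
BLOCKED_TERMS = {"idiot"}                                # placeholder for a safety classifier


@dataclass
class Request:
    agent: str
    acts_as: str
    action: str
    payload: str
    authorized: bool = False  # set by a human or policy engine for high-impact actions


class GuardrailViolation(Exception):
    pass


def identity_check(r: Request) -> Request:
    if r.acts_as not in ALLOWED_IDENTITIES.get(r.agent, set()):
        raise GuardrailViolation(f"{r.agent} may not act as {r.acts_as}")
    return r


def data_sensitivity(r: Request) -> Request:
    # Redact rather than block: mask e-mail addresses before the payload leaves.
    r.payload = EMAIL.sub("[REDACTED]", r.payload)
    return r


def action_authorization(r: Request) -> Request:
    if r.action in IRREVERSIBLE and not r.authorized:
        raise GuardrailViolation(f"{r.action} requires authorization")
    return r


def behavioral_safety(r: Request) -> Request:
    if any(term in r.payload.lower() for term in BLOCKED_TERMS):
        raise GuardrailViolation("payload failed content filter")
    return r


GUARDRAILS = [identity_check, data_sensitivity, action_authorization, behavioral_safety]


def enforce(r: Request) -> Request:
    for check in GUARDRAILS:
        r = check(r)  # raises GuardrailViolation on the first failed check
    return r


ok = enforce(Request("billing-agent", "svc-billing", "send_invoice", "Invoice sent to jo@example.com"))
print(ok.payload)  # Invoice sent to [REDACTED]

try:
    enforce(Request("billing-agent", "svc-billing", "issue_refund", "Refund $40"))
    refund_error = None
except GuardrailViolation as exc:
    refund_error = str(exc)
print("blocked:", refund_error)  # blocked: issue_refund requires authorization
```

Keeping each guardrail as a separate function makes it easy to add, reorder, or audit checks independently.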

Agent Lifecycle Management: From Initiation to Decommissioning

An agent must be managed carefully across its entire lifecycle. At initiation, security policies govern its configuration and operating parameters. The agent is then deployed to its target environment, where teams continuously monitor its health, performance, and compliance.

As business needs change, an agent may need updates or new assignments, while role-based access controls keep its actions in check. The lifecycle ends with decommissioning: archiving or deleting the agent's data according to retention policies.
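The lifecycle stages described above can be modeled as a small state machine that rejects out-of-order transitions, such as reactivating a decommissioned agent. The stage names and allowed transitions are assumptions drawn from the stages in the text, not a standard, and the `retain_data` flag is a hypothetical stand-in for a real retention policy.

```python
from enum import Enum
from typing import List, Set


class Stage(Enum):
    INITIATED = "initiated"            # configured under security policy
    DEPLOYED = "deployed"              # running in its environment, monitored
    UPDATING = "updating"              # being updated or reassigned
    DECOMMISSIONED = "decommissioned"  # retired; terminal


ALLOWED = {
    Stage.INITIATED: {Stage.DEPLOYED, Stage.DECOMMISSIONED},
    Stage.DEPLOYED: {Stage.UPDATING, Stage.DECOMMISSIONED},
    Stage.UPDATING: {Stage.DEPLOYED, Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


class ManagedAgent:
    def __init__(self, name: str, roles: Set[str]):
        self.name = name
        self.roles = set(roles)        # role-based access: what the agent may do
        self.stage = Stage.INITIATED
        self.history: List[Stage] = [Stage.INITIATED]
        self.data: List[str] = []

    def transition(self, new: Stage) -> None:
        if new not in ALLOWED[self.stage]:
            raise ValueError(f"{self.name}: {self.stage.value} -> {new.value} not allowed")
        self.stage = new
        self.history.append(new)

    def can(self, role: str) -> bool:
        # Only a deployed agent may act, and only within its granted roles.
        return self.stage is Stage.DEPLOYED and role in self.roles

    def decommission(self, retain_data: bool) -> List[str]:
        """Retire the agent: archive or delete its data per retention policy
        (retain_data is a hypothetical stand-in), then revoke all access."""
        self.transition(Stage.DECOMMISSIONED)
        archived = list(self.data) if retain_data else []
        self.data.clear()
        self.roles.clear()
        return archived


agent = ManagedAgent("invoice-bot", {"read_invoices"})
agent.transition(Stage.DEPLOYED)
agent.data.append("run log #1")
print(agent.can("read_invoices"), agent.can("issue_refund"))   # True False

archive = agent.decommission(retain_data=True)
print(archive)                                                  # ['run log #1']
try:
    agent.transition(Stage.DEPLOYED)                            # reactivation is rejected
except ValueError as exc:
    print(exc)  # invoice-bot: decommissioned -> deployed not allowed
```

Making decommissioning a terminal state means a retired agent cannot quietly regain access; a replacement has to go through initiation again.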

Human Accountability in Autonomous Decision-Making

Humans remain responsible even when AI acts independently. Legal experts across jurisdictions broadly agree that the people and companies who create, deploy, or oversee AI should be held accountable. Even so, complex systems can create gaps where responsibility is unclear and no one owns the outcome.

To close these gaps, organizations should build clear chains of accountability that cover the entire AI system lifecycle. Detailed records of agent decisions help maintain proper oversight, and regular monitoring and compliance checks play a vital role too.
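Records of agent decisions are most useful for accountability when they are tamper-evident and name a responsible human. Below is a minimal sketch, assuming a hash-chained, append-only log built on Python's standard `hashlib` and `json` modules: each entry stores the SHA-256 hash of the previous entry, so altering any past record breaks verification. The `DecisionLog` class and its field names, including `accountable_owner`, are hypothetical.

```python
import hashlib
import json
from typing import List


class DecisionLog:
    """Append-only, hash-chained log of agent decisions."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries: List[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, agent: str, decision: str, rationale: str, accountable_owner: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "agent": agent,
            "decision": decision,
            "rationale": rationale,
            "accountable_owner": accountable_owner,  # the human answerable for this agent
            "prev_hash": prev,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.record("pricing-agent", "apply 5% discount", "loyal customer, margin ok", "j.doe")
log.record("pricing-agent", "decline 20% discount", "below margin floor", "j.doe")
before = log.verify()
print(before)                                      # True

log.entries[0]["decision"] = "apply 50% discount"  # simulated tampering
after = log.verify()
print(after)                                       # False
```

A real deployment would also add timestamps and store the log somewhere agents cannot write to directly; the chaining only makes tampering detectable, not impossible.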


The Future of Work: Skills, Culture, and Agentic Leadership

Emerging Roles: M-shaped Supervisors and T-shaped Experts

The hybrid workforce model creates three distinct roles for humans who work with agentic systems:

M-shaped supervisors develop breadth through early career rotations in operations, maintenance, engineering, and quality, combined with agentic system fluency. These orchestrators lead blended human-agent teams using AI literacy, domain expertise, integrative problem-solving, and socio-emotional intelligence.

T-shaped experts contribute deep domain knowledge, teaching agents how to perform analysis rather than doing the hands-on analysis themselves. They combine vertical expertise in one field with horizontal collaboration skills across many others. They handle exceptions beyond agent capabilities and help improve agent performance.

AI-augmented frontline workers handle tasks that need physical presence, stakeholder interaction, or ethical judgment. Agents take care of routine aspects like diagnostics and documentation.

What Agentic AI Means for Workforce Planning

Dynamic, activity-based models are replacing traditional workforce planning built around human roles. These models assign each task to humans, agents, or hybrid teams. Because many AI job openings are expected to remain unfilled through 2027, developing talent internally becomes vital. To plan effectively, leaders should:

- Match technology to organizational maturity

- Predict structural changes from agentic AI

- Align and educate leadership

CHROs expect to redeploy nearly a quarter (23%) of their workforce as their organizations implement digital labor, and agent adoption is expected to grow by 327% within two years.

Cultural Shifts: Trust, Transparency, and Purpose

Even advanced agentic deployments fail without proper cultural foundations. Employee trust in autonomous agentic AI systems dropped 89% between May and July of 2025, which underscores how important transparency is. Three cultural foundations matter most:

- Trust building through clear explanations of AI agent behavior

- Transparency about agent-delegated tasks

- Purpose alignment that keeps human judgment while adopting AI collaboration


Conclusion

Key Takeaways

- Agentic AI operates autonomously toward goals - Unlike traditional automation or generative AI, agentic systems can plan, reason, act, and adapt with minimal human intervention through observe-plan-act-refine cycles.

- Organizations are restructuring into flat, outcome-focused teams - Traditional hierarchies are being replaced by networks where 2-5 humans can supervise 50-100 specialized AI agents, enabling faster delivery and better coordination.

- Real-time governance frameworks are essential for success - Gartner predicts that more than 40% of agentic AI projects will fail by the end of 2027, with inadequate risk controls among the causes. Guardrails, human accountability chains, and embedded control systems that prevent cascading failures address that risk.

- The workforce is evolving into hybrid human-AI collaboration - New roles like M-shaped supervisors and T-shaped experts are emerging, with humans focusing on strategic oversight while AI handles execution tasks.

- Cultural transformation determines implementation success - Trust, transparency, and purpose alignment are critical, as employee trust in autonomous AI systems dropped 89% in 2025, making change management crucial for adoption.

FAQs

Agentic AI operates autonomously towards defined goals, planning, reasoning, and adapting with minimal human intervention. Traditional automation, on the other hand, follows fixed rules and predetermined sequences without the ability to adjust to changing circumstances.

Organizations are shifting from traditional hierarchies to flat, outcome-focused teams. In this new structure, a small group of humans can oversee a large number of specialized AI agents, enabling faster delivery and improved cross-functional collaboration.

Two primary roles are emerging: M-shaped supervisors, who manage blended human-agent teams with broad knowledge across multiple domains, and T-shaped experts, who provide deep domain expertise while teaching agents how to analyze and improve their performance.

Organizations need to implement real-time guardrails, embedded control agents, and clear human accountability chains. They should also focus on agent lifecycle management and establish comprehensive documentation of agent decisions to maintain proper oversight.

Successful implementation requires building trust through clear explanations of AI agent behavior, maintaining transparency about which tasks are delegated to agents, and ensuring purpose alignment that preserves human judgment while embracing AI collaboration.

By Vaibhav Sharma